Over the past years, we have seen a proliferation of vulnerabilities affecting many organisations.

Vulnerabilities are challenging to find in an environment lacking a risk-scored asset register.

So what is the challenge in addressing vulnerabilities as they come?

Many factors:

- Fixing application and cloud security vulnerabilities in the first instance is challenging and requires a plan.

- Usually, when first assessed, a system, repository, or library has tons of vulnerabilities, and the task seems overwhelming.

- Scanners do not return the contextual information needed to decide what to fix (where the problem is, how easy it is to exploit, how frequently it gets exploited).

- Operational requirements often demand a very specific time window to maintain operational efficiency and SLA uptime.

- Fixing bugs and upgrading applications and cloud security systems is always fighting for resources against creating new value and products.

- Usually, vulnerabilities get detected at the end of a production/development lifecycle (when the system needs to be pushed to production). Security assessments at this stage are painful because changes are very complex to make this late.

So what is the solution to all this? A deliberate plan, with clear objectives, that fixes the most urgent vulnerabilities first.

But how do we define which vulnerabilities are more urgent? Cybersecurity vulnerabilities are complex to evaluate because they can affect different parts of a modern technology stack:

- Vulnerabilities in code for application security

- Vulnerabilities in Open source libraries for application security

- Vulnerabilities in Runtime environments (like Java) or libraries for a runtime environment (like Log4j) for application security

- Vulnerabilities in Operating systems for infrastructure and cloud security

- Vulnerabilities in Containers for infrastructure and cloud security

- Vulnerabilities in Supporting Systems (like Kubernetes control plane) for infrastructure and cloud security

- Vulnerabilities or Misconfiguration in cloud systems for infrastructure and cloud security

So how do we select among them? Usually, the answer is triaging the vulnerabilities. Normally, those triaging the vulnerabilities are security champions collaborating with the development or security teams.

Security teams usually struggle with time and scale: they have to select among tons of vulnerabilities without much time to analyse threats and determine which vulnerabilities pose the most risk.

The answer to this problem is rarely simple; it often requires risk assessment and collaboration with the business to allocate enough time and resources to plan for resolution and ongoing assessment. This approach has proven to be one of the most efficient ways to tackle vulnerabilities at scale (https://www.gartner.com/smarterwithgartner/how-to-set-practical-time-frames-to-remedy-security-vulnerabilities).

Risk and severity are very different concepts, but the two terms are often used interchangeably.

- Risk = probability of an event occurring * Impact of an event occurring

- The severity usually is linked to CVSS analysis of the damage a vulnerability could cause
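To make the distinction concrete, here is a minimal sketch in Python. The function name, the probabilities and the impact figures are illustrative assumptions rather than values from any scoring standard. It shows that two findings with the same severity can carry very different risk once probability and impact are considered.

```python
def risk(probability: float, impact: float) -> float:
    """Risk = probability of an event occurring * impact of the event."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return probability * impact


# Two hypothetical findings that share the same severity rating,
# but sit on assets with different exposure and business impact.
internal_test_box = risk(probability=0.02, impact=10_000)
public_payment_api = risk(probability=0.40, impact=500_000)

print(public_payment_api > internal_test_box)
```

Severity alone would rank these two findings equally; the risk figure separates them.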

What’s wrong with risk assessment of vulnerabilities as it stands?

- Not Actionable risk – Risk scoring is usually based on CVSS base and/or temporal score. Those scores are not the true reflection of the risk in either cloud security or application security.

- Not Real-Time – Risk scoring is traditionally static, kept on a spreadsheet and updated based on impact and probability analysis, but those values are often arbitrary and not quantifiable. Modern organisations have a dynamic landscape where the risk changes constantly.

- Not Contextual – Base severity is only a starting point that needs to be tailored to the particular environment where the vulnerability appears. That is particularly complex to determine manually.

- Static Threat intelligence – Organisations consider threat intelligence a separate process when triaging and assessing vulnerabilities. Triaging vulnerabilities by looking at threat intelligence is usually delegated to individuals prone to miscalculation and risk. Moreover, if not curated for specific assets, threat intelligence alerts can be expensive but lead to very little intelligence.

- Not Quantifiable – Risk that is based on data and in real-time is quantifiable. Risk assessed manually by individuals based on opinion is qualitative, not quantitative.

- Not Actionable – Risk scoring and tracking is usually done offline or on a spreadsheet and reviewed quarterly. That can lead to confusion.

The Landscape

The Vulnerability Context and Landscape

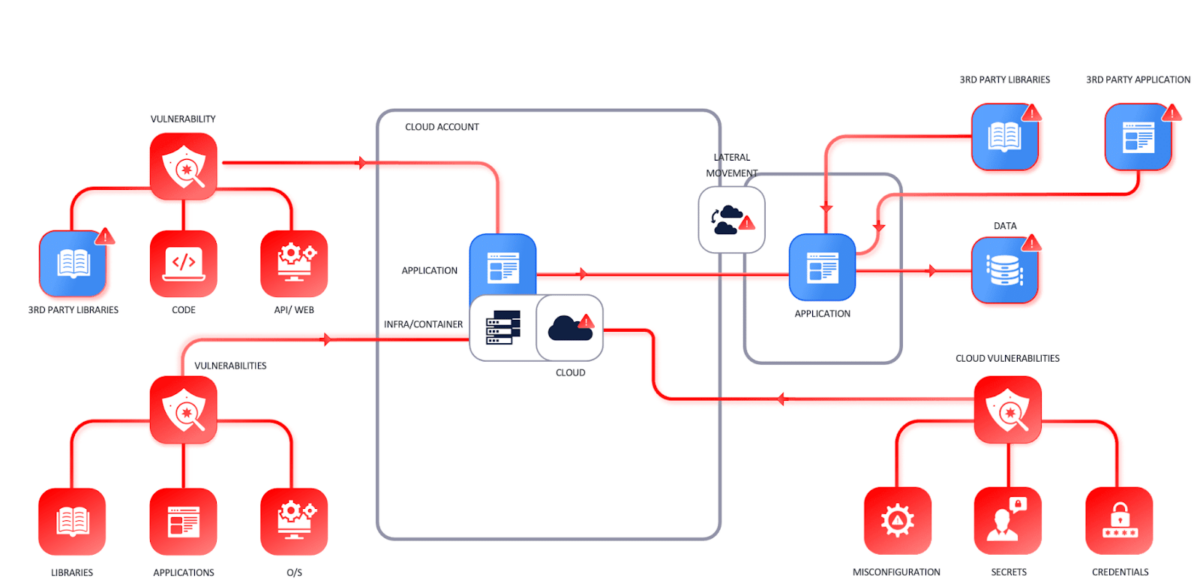

The asset landscape of modern organisations is complex and widespread. Organisations build applications dynamically by putting together internal code, libraries from 3rd parties, open-source operating systems and software into containers deployed in the cloud.

We can break down the assets that organisations leverage in the following macro-categories:

- Runtime: where software or applications run/execute (cloud security or infrastructure security)

  - Operating systems (in data centre servers, in cloud workloads)

  - Container systems (in the data centre, in the cloud)

  - End-user computing (laptops, mobile devices)

- Software artefacts (application security)

  - Built software: an application built by the organisation and/or purchased (e.g. software like Adobe)

  - Software asset: libraries, compiled code and similar partially built software that can’t be run on its own but forms part of a build file

  - Code: pure code files that need to be compiled

  - Open source software/libraries: code built by the community that can be used (to a degree of freedom dictated by the licence)

  - Software on endpoints or container systems that is fully compiled and written by or purchased from a 3rd party

- Website/Web API (application security)

  - Frontend websites

  - Web libraries or servers that enable web applets to run

The vulnerability landscape in modern organisations is complex; we can categorise vulnerability types or security misconfigurations into several categories. Vulnerabilities in the various categories have different behaviours and require different levels of attention.

We can categorise asset vulnerabilities into the following categories:

- Application Security – Vulnerabilities in software, 3rd party libraries, and code running in live systems

- Infrastructure / Cloud security – Vulnerabilities that concern images or similar infrastructure systems running in the cloud

- Cloud security – Misconfiguration of cloud systems (e.g. key managers, S3)

- Network security / Cloud security – Vulnerabilities affecting network equipment such as WAFs, firewalls and routers

- Infrastructure security – Everything that supports a running application: traditionally servers, endpoints and similar systems

- Container security – Vulnerabilities derived from either the image (in a registry or deployed) or the build file that composes it

A wide range of tools is used to measure the security posture and health of the different elements that compose a system, as illustrated in the picture below.

The resolution time and SLAs are fairly different between asset types across the various categories.

Vulnerability Tooling Landscape (note RAST is often referred to as RASP)

A new vision for risk assessment of vulnerabilities

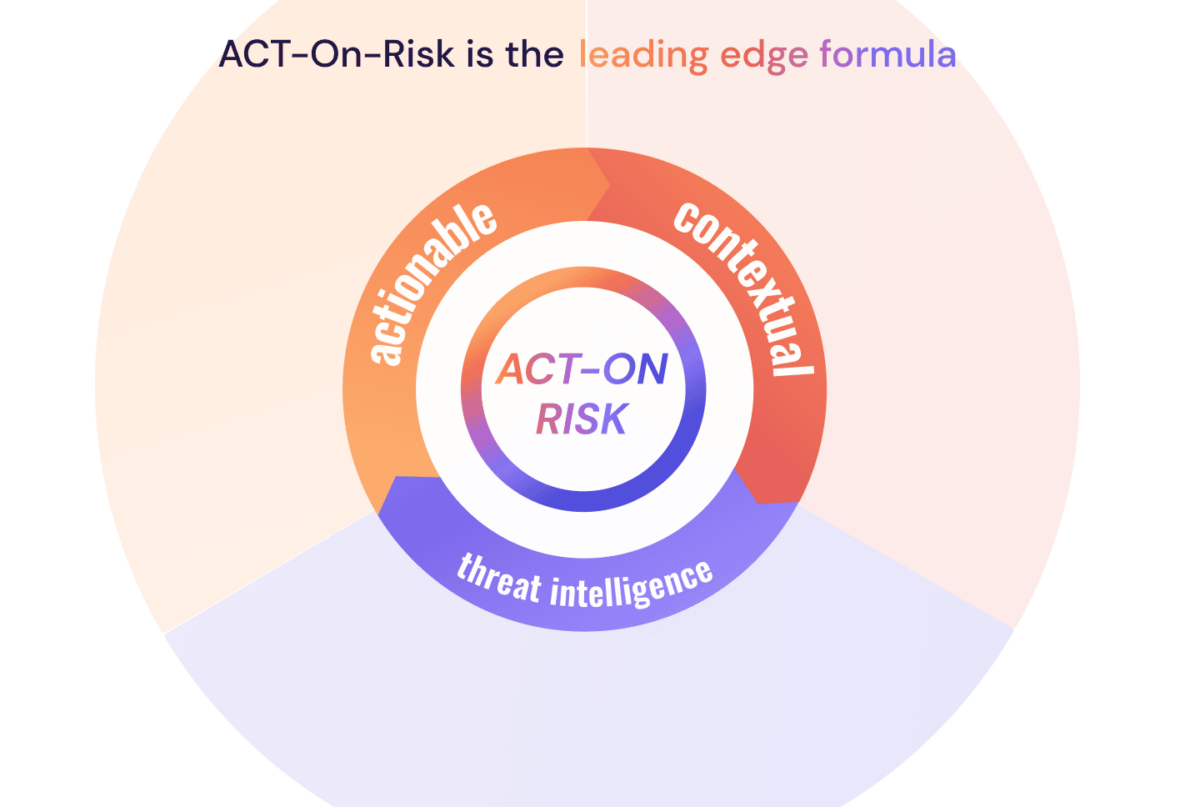

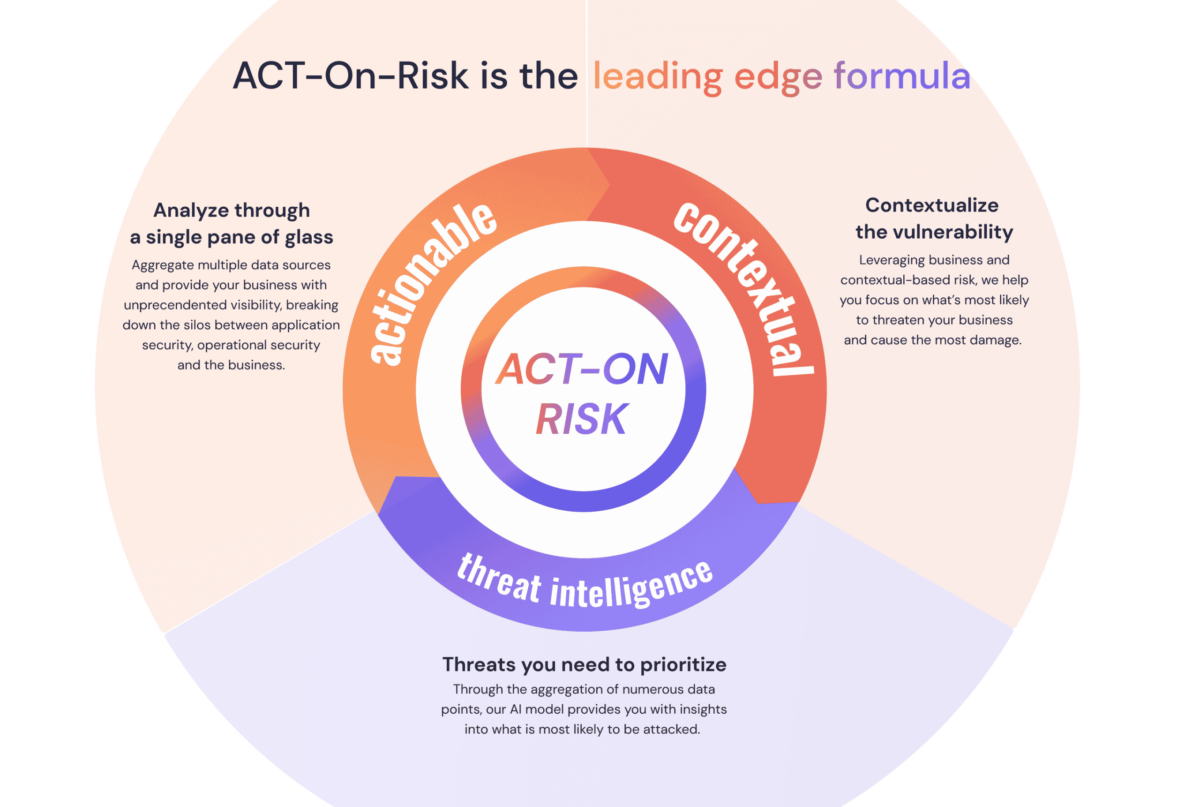

In response to the risk issues mentioned above, we created ACT-ON Risk ®.

This is a new way of performing risk assessment programmatically and dynamically, which is much closer to, and derived from, DevSecOps methodologies.

How is modern Risk Calculated, and WHY

Phoenix Security created the “ACT-ON(™) Risk” assessment methodology with modern concepts and based on the following Pillars:

- Actionable – risk should be actionable and should lead to remediation. Remediation should also dynamically influence the risk.

- Contextual – risk should be contextual and consider business factors like impact, criticality, data impacted, and how much damage an attack could cause

- Threat Intelligence – threat intelligence should influence the risk scoring and be actionable. In this way, the risk is automatically updated in line with the shifting landscape of threats that affect the organisation day by day

- On-time/real-time – risk should be real-time, reflecting how and where modern organisations are being built

- quaNtifiable – risk should be quantified and based on data. There can be a qualitative opinion on risk, but the risk profile of an application should be based on precise data

Risk calculated in this way is dynamic and reflects the true picture of modern organisations’ risk profile and exposure.

Remediation times and actions can be based on this form of dynamic risk as those times would automatically take into consideration the following:

- Contextual Changes – Dynamically adapt to changes in the applications

- External Threat – Changes in threat intelligence and vulnerability changes

- New elements being introduced
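As a rough illustration of how remediation times could follow a dynamic risk score, the sketch below maps a score to a remediation window. The 0-100 scale, the band thresholds and the day counts are all hypothetical assumptions chosen for the example; they are not the scale or SLAs used by ACT-ON Risk.

```python
from bisect import bisect_right

# Hypothetical bands: (lower bound of risk score, days allowed to remediate).
# Both the 0-100 scale and the day counts are assumptions for illustration.
SLA_BANDS = [(0, 90), (40, 30), (70, 7), (90, 2)]


def remediation_days(risk_score: float) -> int:
    """Return the remediation window for a risk score on a 0-100 scale."""
    if not 0 <= risk_score <= 100:
        raise ValueError("risk_score must be between 0 and 100")
    lower_bounds = [lower for lower, _ in SLA_BANDS]
    # Find the last band whose lower bound is <= risk_score.
    band = bisect_right(lower_bounds, risk_score) - 1
    return SLA_BANDS[band][1]


# A finding whose score rises after new threat intelligence arrives
# automatically gets a shorter window.
print(remediation_days(55), remediation_days(92))
```

Because the window is derived from the score rather than stored alongside it, any change in context or threat intelligence that moves the score also moves the deadline.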

Risk is multi-layer, multi-dimension and multi-asset

Risk is calculated in multiple tiers with different factors influencing the risk score at different levels.

At the individual vulnerability level, we consider

- Real-time exploitability of the vulnerability – We analyse several sources to determine whether the vulnerability is easily exploitable.

- Probability of exploitation – It’s calculated leveraging real-time monitoring of several honeynets that capture the information from the web.

- Probability of exploitation – Relevant information from Dark Web sources is taken into account as well.

- Probability of exploitation – Chatter: this factor monitors the probability of exploitation based on the chatter level and AI-based sentiment analysis on social media channels like LinkedIn and Twitter, and on other forums like Reddit, Pastebin, etc.

- Probability of exploitation – Industry: this factor determines the likelihood of exploitation based on information from other companies in the same industry

- Probability of exploitation – Contextual visibility: this factor determines how visible the vulnerability is from an attacker’s point of view, based on context and external scanning visibility
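One way to picture how several exploitation signals could feed a single probability is a noisy-OR combination, sketched below. This is an illustrative assumption, not a description of how Phoenix Security weights these factors; the signal names and values are made up, and the signals are treated as independent for simplicity.

```python
from math import prod
from typing import Mapping


def combined_exploitation_probability(signals: Mapping[str, float]) -> float:
    """Combine per-signal probabilities with a noisy-OR.

    Each value is the assumed probability that the signal on its own
    indicates exploitation. The result grows as more signals fire and
    never exceeds 1.
    """
    for name, p in signals.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return 1.0 - prod(1.0 - p for p in signals.values())


# Hypothetical readings for one vulnerability.
signals = {
    "honeynet": 0.30,
    "dark_web": 0.10,
    "chatter": 0.20,
    "industry": 0.05,
    "visibility": 0.50,
}
print(round(combined_exploitation_probability(signals), 4))
```

A noisy-OR is only one option; a weighted sum or a trained model would serve the same purpose. What matters is that each signal can raise the combined probability without any single one dominating by construction.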

At the asset level, a different set of factors is taken into account:

- Locality: This factor reflects an asset’s “logical position” and measures whether the asset is fully external, entirely internal or somewhere in between. Here we leverage the aggregation of assets into groups and derive each asset’s locality based on its group.

- Impact: another important contextual element is an asset’s level of impact (or criticality), which would be directly related to the criticality of the service or application to which the asset belongs.

- Density: when aggregating vulnerability risk into higher groups – like an asset – one of the trickiest parts is to avoid diluting the “combined risk” through averages. Our solution is to introduce a density factor that captures the relative number of vulnerabilities affecting the asset.
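The dilution problem and the role of a density factor can be sketched as follows. The formula is a hypothetical simplification for illustration only: `locality` and `impact` are assumed to be weights between 0 and 1, and density is assumed to be the asset's vulnerability count relative to the busiest asset in its group.

```python
from statistics import mean
from typing import Sequence


def asset_risk(
    vuln_risks: Sequence[float],
    locality: float,
    impact: float,
    max_vulns_in_group: int,
) -> float:
    """Aggregate vulnerability risk (0-100 each) into one asset-level score.

    In this sketch the result can reach 200, because the density factor
    scales the average by up to 2x.
    """
    if not vuln_risks:
        return 0.0
    if max_vulns_in_group < len(vuln_risks):
        raise ValueError("max_vulns_in_group is smaller than this asset's count")
    density = len(vuln_risks) / max_vulns_in_group
    # The average alone hides how many findings there are,
    # so the density factor scales it up for crowded assets.
    return mean(vuln_risks) * locality * impact * (1.0 + density)


# Same average risk (50), very different number of findings.
few = asset_risk([50, 50], locality=1.0, impact=1.0, max_vulns_in_group=40)
many = asset_risk([50] * 40, locality=1.0, impact=1.0, max_vulns_in_group=40)
print(few < many)
```

A plain average would score both assets identically; with the density factor, the asset carrying forty findings ranks above the one carrying two.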

ACT-ON Risk: contextual, real-time modern risk and posture calculation

Enriching asset and vulnerability context increases visibility, giving IT security decision-makers the information needed to prioritize security activities while maintaining strong assurances. Without risk context, any approach is a sledgehammer one; an arbitrary and ad-hoc security strategy is not a strategy, and this type of approach increases risk. Data-driven decision-making, on the other hand, provides quantified, actionable risk scores that allow a company to take a more surgical approach: calculate contextual risk, prioritize activities, and apply resources strategically based on business impact and data-driven probabilities. This reduces the anxiety of facing a seemingly unmanageable backlog of issues while still providing a high degree of security assurance.

Although the number of CVEs published each year has been climbing, placing a burden on analysts to determine the severity and recalculate risk to IT infrastructure, enriching a CVE’s context offers an opportunity to increase IT security team efficiency. For example, in 2021, there were 28,695 vulnerabilities disclosed, but only roughly 4,100 were exploitable, meaning that only 10-15% presented an immediate potential risk. Having this degree of insight offers clear leverage, but how can this degree of insight be gained?

Inputs To Calculate A Better Contextual Risk

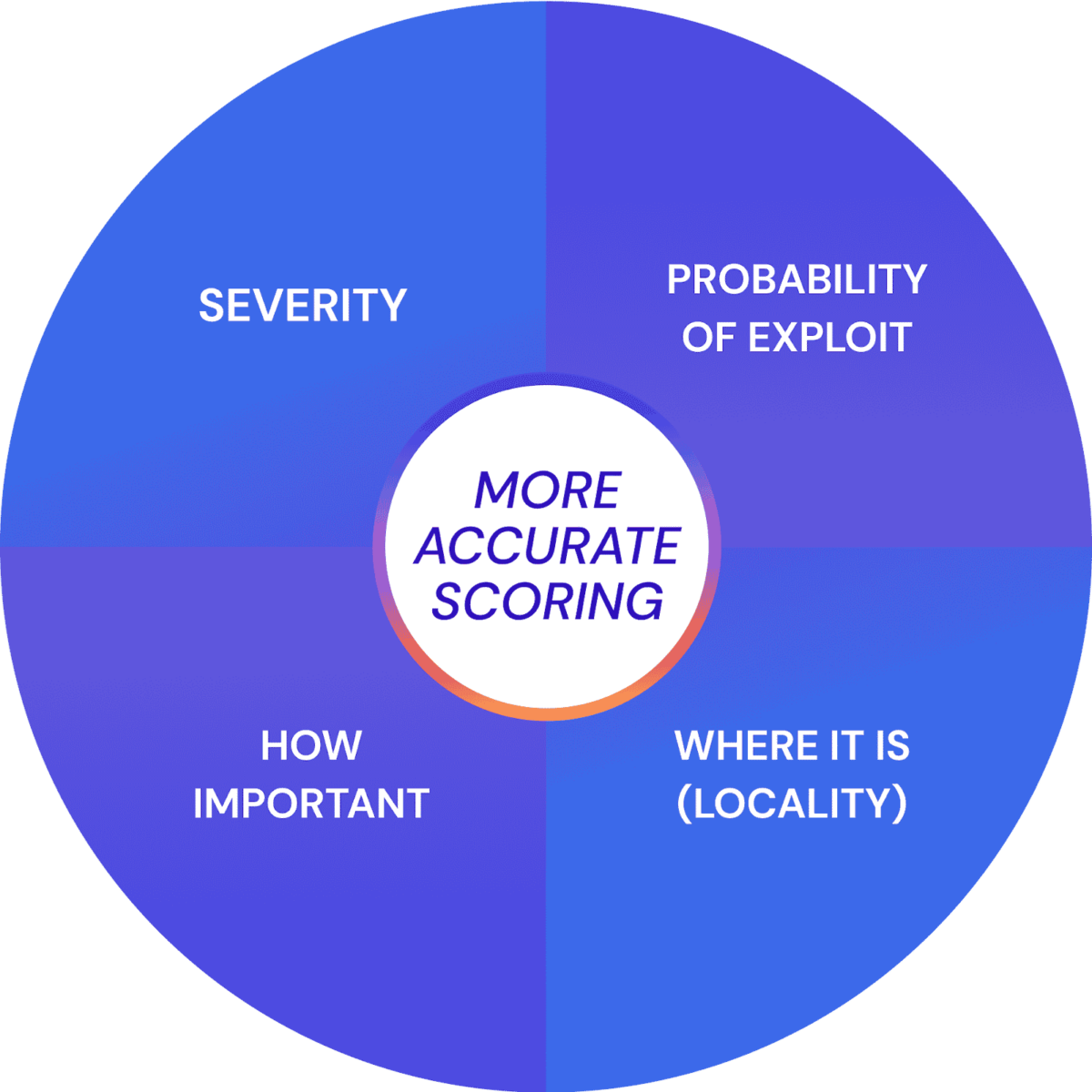

When we revisit the most basic method of calculating risk, we see that it combines impact and probability. Although this makes sense, it is only a high-level perspective and of little use in practical situations. The business impact, operational criticality, inherent value, and technical locality of all IT systems and information need to be combined with CTI data that describes the state of the current threat environment. More detailed and better-quality inputs produce a more accurate contextual risk score from the perspective of a particular asset or CVE vulnerability. These inputs are discussed below and shown in Diagram 1. Diagram 2 visualizes the combined inputs to show a final suggested priority position.

The severity of a vulnerability

Severity measures how much damage can be done to a target system. Regarding prioritisation, a vulnerability that allows remote code execution (RCE) without user interaction at admin-level system privileges is of very high severity. However, the vulnerability is contextually less severe if the system does not contain highly sensitive data and is on a segmented network without a public attack surface. If, on the other hand, the vulnerability affects a public-facing system that contains private customer data, it should be prioritized with a higher score.

The probability of exploitation

Exploitability measures how much opportunity a vulnerability offers an attacker. Probability depends on the exploitability of a particular vulnerability and is directly impacted by several main factors, including, but not limited to, the level of skill required to conduct an attack (known as the complexity of an attack), the availability of PoC code or fully mature malicious exploit code for a particular vulnerability, and whether the vendor of the vulnerable software has issued a security update to fix the security gap. Exploitability can also be enriched with CTI from social media and dark-web reconnaissance. All of these factors impact the greater context of risk.

If fully mature malicious exploit code is available to the general public and can be executed by a low-skilled attacker, the probability of exploitation in the wild increases significantly. On the other hand, if a security patch has been made available by the vendor and remediation can be done quickly, the priority of the vulnerability can be elevated because a simple procedure offers a high degree of protection.

How important the asset is

Importance measures the potential cost to an organization should an asset be breached, and how valuable an attacker may consider the asset. Value is directly correlated to the risk assurances required for an asset to be considered secure; therefore, it is a priority in security planning.

Systems and information may be critical to an organization if they are directly related to revenue-generating activities or host sensitive data protected by national or regional legal regulations such as GDPR or HIPAA, or by an industry standard such as PCI-DSS. Assets may also be considered especially important if they are significantly costly to replace or restore to an operational state.

The locality of the asset

Locality measures an asset’s technical specification and placement in the network topology. A system and the information it contains may be within a local area network (LAN) or wide area network (WAN), on external infrastructure (IaaS) that an organization fully or partially controls, on a segmented VLAN, or behind a firewall, network intrusion detection system (NIDS), or other security appliance. It may be a virtual machine running on a type 1 or type 2 hypervisor, or it may use containerization to host one or multiple operating systems and software applications. The types of attack that an asset is susceptible to, and the vulnerabilities that impact it, depend on its locality.

- Vulnerability Severity: severity measures how much damage can be done to a target system, and it’s normally based on a CVSS score or similar.

- Probability of Exploitation: measures how easy it would be for an attacker to exploit the vulnerability; it typically includes factors like the required skills, availability of sample exploits and threat intelligence insights.

- Asset Criticality: focuses on the potential cost to the organisation should an attack be successful. This factor is directly related to the level of business importance or criticality of the applications and environments that contain this asset.

- Asset Locality: this factor reflects the position of the asset within the organisation’s networks and infrastructure; in particular, it focuses on whether the asset is exposed externally, completely internally, or somewhere in between.
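The four inputs above can be combined in many ways. The sketch below uses a simple product purely for illustration; the field names, the 0-1 weights and the multiplicative formula are assumptions, not the ACT-ON Risk calculation. It mirrors the earlier example of a high-severity RCE on a segmented system versus a lower-severity flaw on a public-facing system holding customer data.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Finding:
    name: str
    severity: float             # e.g. CVSS base score, 0-10
    exploit_probability: float  # 0-1
    asset_criticality: float    # 0-1, business importance (hypothetical scale)
    asset_locality: float       # 0-1, where 1.0 means fully external (hypothetical scale)

    def contextual_risk(self) -> float:
        """Scale normalised severity by the three contextual factors (0-100)."""
        return (
            (self.severity / 10.0)
            * self.exploit_probability
            * self.asset_criticality
            * self.asset_locality
            * 100.0
        )


def prioritise(findings: Iterable[Finding]) -> List[Finding]:
    """Return findings ordered from highest to lowest contextual risk."""
    return sorted(findings, key=lambda f: f.contextual_risk(), reverse=True)


findings = [
    Finding("RCE on segmented test server", 9.8, 0.3, 0.2, 0.1),
    Finding("SQL injection on public customer portal", 7.5, 0.6, 0.9, 1.0),
]
ranked = prioritise(findings)
for f in ranked:
    print(f.name)
```

Sorting by severity alone would put the RCE first; once criticality and locality are included, the public-facing finding moves to the top. A pure product lets any factor near zero suppress the score, which is a deliberate simplification here; a production model would likely apply floors or weights.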

Automating The Whole Process

Contextualising cyber-risk accurately makes vulnerability prioritisation more effective. Further operational efficiency comes from automating the collection of related data and the calculation of contextual risk scores for a particular system, a sub-group of systems, or the IT infrastructure of an entire enterprise.

Enriching CVE data is prohibitively time-consuming and complex, so delegating this task to an IT security team detracts from actual remediation activities. Instead, presenting human analysts and IT security administrators with an easily accessible list of security priorities ranked by risk score lets them focus on activities such as remediation, response, developing or adjusting controls and policies, or developing training programs.

Conclusion

No matter how risk is calculated, it should be dynamic, automated and based on more threat signals.

Security teams are too stretched to perform manual assessments. 54% of security champions and professionals are considering quitting due to the overwhelming complexity and tediousness of tasks that could be automated.

New security professionals are struggling to get into triaging as the knowledge barrier required to assess vulnerabilities is incredibly high.

The more the assessment process can be automated, the better. This methodology makes the job of security professionals easier: they can act in a consultative way and scale much better.

Scaling the security team means ensuring that security champions and vulnerability management teams can help decide how to fix, instead of spending their time triaging and deciding what to fix.

How Can Phoenix Security Help

Appsec Phoenix is a SaaS platform that ingests security data from multiple tools covering cloud, applications, containers, infrastructure, and pentests.

The Phoenix platform deduplicates, correlates and contextualises this data, and shows the risk profile and potential financial impact of applications and where they run.

The Phoenix platform enables risk-based assessment, reporting and alerting on risk linked to contextual, quantifiable real-time data.

The business can set risk-based targets to drive resolution, and the platform will deliver to each development team a clean, precise set of remediation actions, updated in real-time with the probability of exploitation.

Appsec Phoenix enables the security team to scale and to deliver the most critical vulnerabilities and misconfigurations directly into the backlog of development teams.
